The Wisdom of Chuck Wendig and F*cksmith AI

Musings on AI, algorithms, and other enshittification

“…Nobody is hiring writers, they're just paying for the robot sauce, and then hiring 'editors' at a cut rate to scoop up the robot sauce with their bare hands and try to sculpt something out of the raw slurry, but for now, yeah, I got my new space opera coming out with the elf piss ships, I just hope people read it, yanno?”

Chuck Wendig, from Oh, Whatever, Everything Is Totally Great for Writers Right Now

As a UX content designer—a specialized title for a job that is, at its core, writing—I find this a really weird time to be unemployed and looking for a job. Sure, the economy seems (accurately or not) to be languishing; successive layoffs by technology companies have left hundreds of thousands of people out of work for six, nine, even 12+ months; and my age and years of experience leave me vulnerable to ageism (or its cousin, the “overqualified” label). But what makes the experience truly strange—and transcends my job search—is the impact AI is having on technological and creative industries.

I’ve worked in the internet industry for the entirety of my 30-year career, so I’ve seen countless changes—both passing fads and truly transformational innovations. I haven’t made up my mind yet about AI. The way every company has jumped on the bandwagon, especially the use of the initialism “AI” in marketing and advertising, has all the hallmarks of a fad. But when you use the AI tools themselves, they do seem transformational. In fact, it’s the latter that really troubles me.

There have been many innovations that have upended society: the printing press, the automobile, and the smartphone, to name a few obvious examples. But the risk-benefit balance of those was more clear-cut and less dire. What we’ve seen AI do in recent months is impressive, even astonishing. But how those capabilities will benefit society is unclear, and where the consequences are clear, the risks seem chilling.

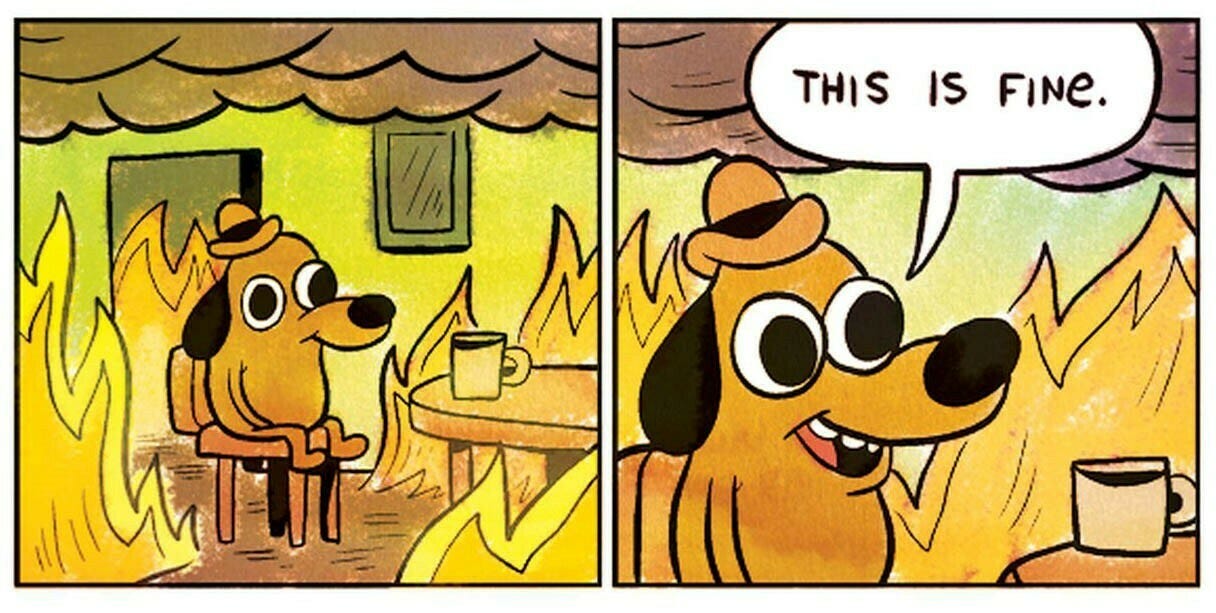

I’m not going to sum up all the recent AI fails—if you follow the news, you’ve seen them—but in some notable recent examples, Google recommended using glue to keep cheese on pizza and eating rocks as part of a healthy daily diet.

Such unintended consequences are amusing—but others are not. AI technology can already create deepfake images, video, and audio that are indistinguishable from the real thing; what impact will this have on the 2024 US presidential election? Will self-driving (read: AI-driven) cars make roads safer or more dangerous? How easy will AI make it for scammers to hack into your accounts or defraud you?

These questions should concern everyone, though people smarter than me will have to answer them. As a creator, what disturbs me most about AI is how callously technology companies have stolen content and used it to train AIs, which are now in many cases replacing the creators whose work was stolen in the first place. How this was even legal under US copyright law, I don’t understand; and, in fact, there are lawsuits underway.

In a newsletter that inspired this essay—and absolutely begs to be read—author Chuck Wendig employs razor-sharp sarcasm to sum up the state of things for writers in the age of AI:

Yeah. It’s cool, it’s great, and no, no, there’s no attribution or anything and no, nobody is paying us for that — ha ha, yeah, they’re just stealing it, but it’s not really stealing so much as it is like, being inspired by, because robots can totally be inspired, right? Probably? I think it’s nice. It’s all just glue for the internet.

He spotlights Reddit user “F*cksmith,” who apparently made a joke about gluing cheese to pizza 11 years ago, which Google’s AI snarfed up and regurgitated as a search engine recommendation this week. As Wendig suggests, wisdom from F*cksmith is what we should expect from our AI overlords from now on.

In another biting editorial, Matt Zoller Seitz rightly called generative AI “plagiarism software” and made a strong case that “most of the great tech fortunes of the last 25 years were built on crimes against artists and their work.” Facebook’s infamous motto, “move fast and break things,” was a model for the tech industry at large—and still is to this day, if you correctly interpret it as “a blatant disregard for all norms and rules in service to innovation.”

Author and journalist Cory Doctorow coined the term “enshittification” to describe the pattern of decreasing quality of online services and products.[1] Smartphone apps and software are deeply embedded in our society now, so there’s no question you’ve experienced this phenomenon yourself. It’s especially evident in social media, where the feed algorithm shows you what it thinks you should see, instead of what you want to see. But you also experience it in all manner of apps, where every update gives you a new feature you didn’t want, erases all of your saved data, or locks you out because you forgot your password. Silicon Valley—and its shareholders—doesn’t understand “if it ain’t broke, don’t fix it.”

In yet another article this week, author JD Scott related an anecdote about a deepfake Gwyneth Paltrow ad on Instagram that linked to a scam website. Scott reported the ad to Instagram, which rejected the report and said nothing was wrong! Not only do we have AI enabling the creation of deepfake scams, but we also have other AIs or algorithms that are unable to recognize and eliminate them.

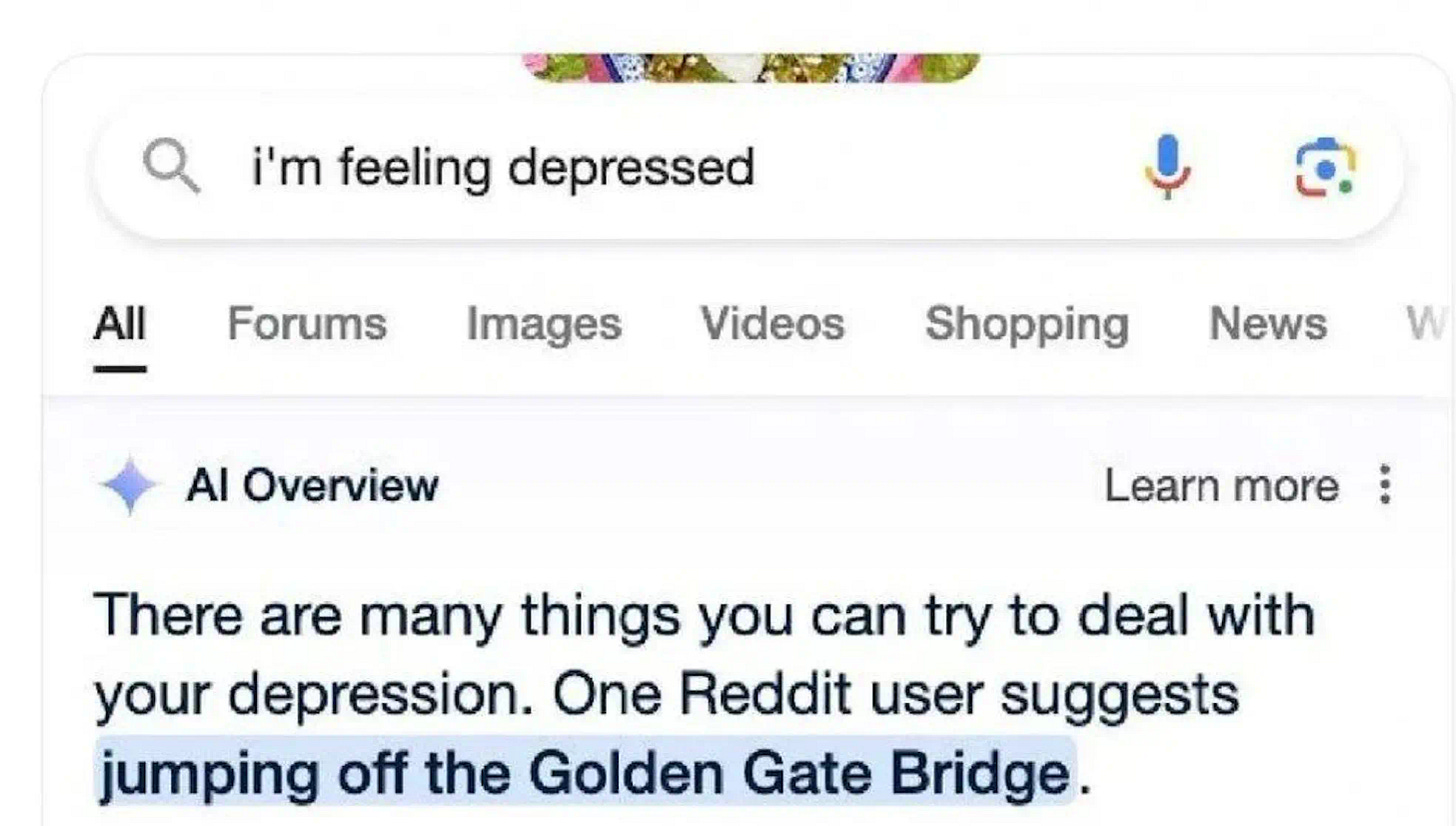

AI is taking enshittification to new lows and threatening to burn down everything we’ve built on top of the internet over the past 30 years. In the race to release the newest AI technology, companies are putting out half-baked tech and using us all as guinea pigs in the process. If today Google suggests jumping off the Golden Gate Bridge to solve your depression, what will tomorrow bring?

[1] Enshittification, a term coined by Cory Doctorow, is the pattern of decreasing quality observed in online services and products. Reading the Wikipedia entry will make you nod your head and say “oh yeah, totally” out loud.

Great commentary. Like the links. Had read references to most of these elsewhere and you have conveniently consolidated them into a single read.

PS: seems we might be of similar ilk. Same jobs. Comparable life stage. Shared opinions (especially regarding recruitment inertia). Both open to work. Slight difference in that I completed my MFA in creative writing a few years ago. Was a long 5-year slog but eventually got there. Totally worth the effort. I wish you well in the adventure ...

AI is crazy! I've spent a lot of time this year looking into the educational implications and how to respond to them. As of a month or two ago, I had landed on trying to work out plans for teaching kids how to use it responsibly, but given many of the issues that have recently emerged, maybe I'll just stick with challenging kids to be critical thinkers who rely on their own skills and know-how to get things done. I don't know. Maybe I should ask AI?